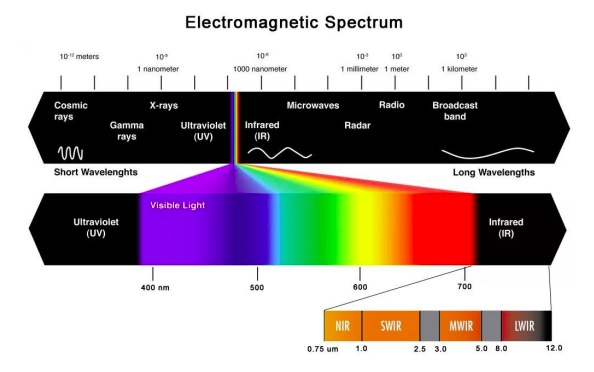

While we humans see only what we call visible light, bees can see UV light! Some camera sensors register IR wavelengths, and some cameras sense visible light and continue on through near-infrared (NIR) and short-wave infrared (SWIR).

In this piece we focus on applications that benefit from combined VIS-SWIR solutions, from 400 nm through 2.5 µm.
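To keep the band names straight, here is a minimal Python sketch that labels a wavelength by band. The band edges (VIS 400 to 700 nm, NIR 700 to 1000 nm, SWIR 1000 to 2500 nm) are common approximations assumed here for illustration, not figures from any sensor datasheet, and definitions vary by source. The names `BANDS`, `band_of`, and `um_to_nm` are hypothetical helpers.

```python
# Approximate band edges in nanometres (assumed; conventions vary by source).
# Each band is a half-open interval: lower edge included, upper edge excluded.
BANDS = [
    ("VIS", 400, 700),
    ("NIR", 700, 1000),
    ("SWIR", 1000, 2500),
]


def um_to_nm(wavelength_um):
    """Convert micrometres to nanometres (1 um = 1000 nm)."""
    return wavelength_um * 1000


def band_of(wavelength_nm):
    """Return the band label for a wavelength given in nanometres."""
    for name, lower, upper in BANDS:
        if lower <= wavelength_nm < upper:
            return name
    return "outside VIS-SWIR"


if __name__ == "__main__":
    for nm in (350, 550, 850, 1450):
        print(nm, "nm ->", band_of(nm))
```

Working in a single unit (nanometres here) avoids mixing up nm and µm when comparing sensor, lens, and lighting specifications.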

Example applications

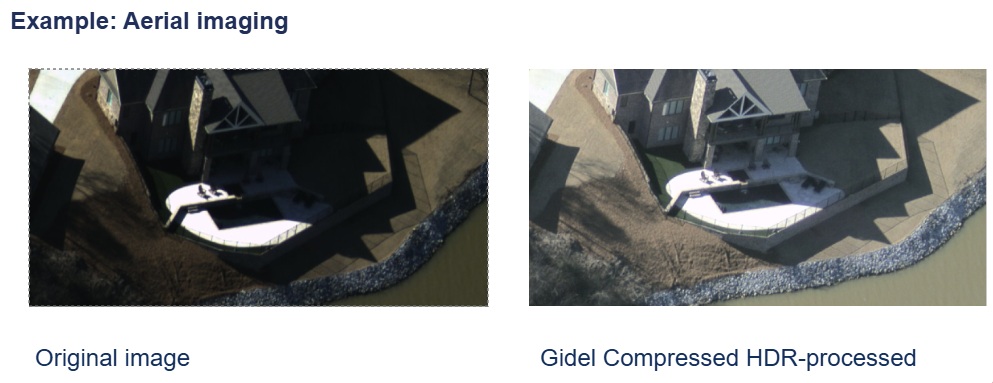

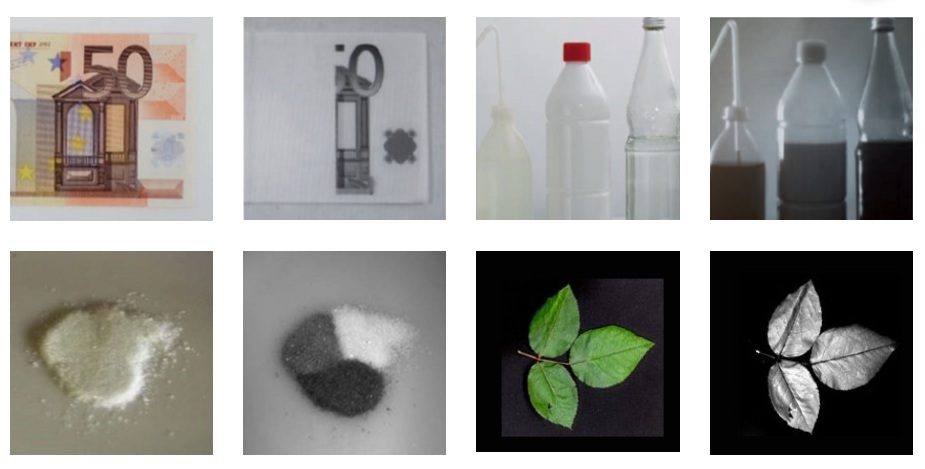

Just to whet the appetite, consider the four image pairs below. In each case, the left image was captured with visible wavelengths, while the right image used SWIR portions of the spectrum. These pairs were chosen to highlight the compelling power of SWIR to identify features that are not apparent in the visible portion of the spectrum.

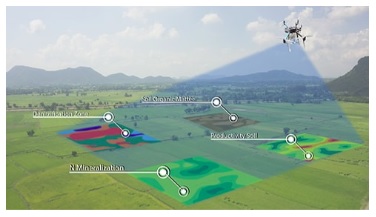

For certain applications, one wouldn’t need the human-visible images, of course: with machine vision, the whole point is to automate the image processing and the corresponding actions. So for counterfeit banknote detection, bottle fill level monitoring, materials identification, or crop monitoring, one might just design for the SWIR portion of the spectrum and ignore the VIS.

But some applications might benefit from both the VIS and the SWIR images. For example, the vein imaging application might require a VIS reference image as well as a SWIR-specific image, for patient education and/or medical records.

For the crop monitoring application above, the VIS spectrum clearly orients trees, hills, buildings, and roadways. Meanwhile pseudo-color-mapping shows the varied moisture levels as sensed in the SWIR portion of the spectrum.
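For readers curious how such a pseudo-color overlay can be built, here is a minimal pure-Python sketch, not the method used to produce the image above. It assumes the VIS and SWIR frames are already pixel-aligned (which a single VIS-SWIR sensor helps with), represents each frame as a 2-D list of intensities, and uses a simple blue-to-red ramp. The function names and the `alpha` blend weight are hypothetical, and which end of the ramp means "wet" depends on the band and calibration.

```python
def normalize(band):
    """Scale a 2-D list of raw intensities to the range 0.0 to 1.0."""
    flat = [v for row in band for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1  # avoid division by zero on a uniform frame
    return [[(v - lo) / span for v in row] for row in band]


def pseudo_color(t):
    """Map t in [0, 1] to an (R, G, B) tuple on a blue-to-red ramp."""
    return (round(255 * t), 0, round(255 * (1 - t)))


def overlay(vis_gray, swir, alpha=0.5):
    """Blend a pseudo-colored SWIR frame over a grayscale VIS frame.

    vis_gray: 2-D list of 0-255 gray levels (the orientation image).
    swir:     2-D list of raw SWIR intensities, same shape as vis_gray.
    alpha:    weight of the SWIR color layer (0 = VIS only, 1 = SWIR only).
    """
    result = []
    for vis_row, swir_row in zip(vis_gray, normalize(swir)):
        row = []
        for gray, t in zip(vis_row, swir_row):
            color = pseudo_color(t)
            row.append(tuple(round((1 - alpha) * gray + alpha * c) for c in color))
        result.append(row)
    return result


if __name__ == "__main__":
    vis = [[100, 100], [100, 100]]
    swir = [[0, 10], [5, 10]]
    for row in overlay(vis, swir, alpha=0.5):
        print(row)
```

In practice one would do this with an image library and a perceptually uniform colormap, but the structure is the same: normalize the SWIR band, map it to color, and blend it over the VIS reference.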

The range of potential applications combining VIS and SWIR is staggering. One can improve on one’s own or a competitor’s previous application, or innovate something altogether new.

Sensors that register both VIS and SWIR wavelengths

Sony’s IMX992 and IMX993 sensors utilize Sony’s SenSWIR technology, such that a single sensor and camera may be deployed across the combined VIS and SWIR portions of the spectrum. Without such sensors, a VIS-SWIR solution would require at least two separate cameras, one for VIS and one for SWIR. That would add unnecessary expense, take up more space, and require camera and image synchronization.

Now there are cameras, such as several in Allied Vision’s Alvium series, in which Sony’s SenSWIR sensors are embedded. They come with several interface options, including MIPI, USB3 Vision, and 5GigE Vision.

Lens manufacturers doing their part

One of the beauties of the free-market system, together with agreements on standards for interfaces and lens mounts, is that each innovator and manufacturer can focus on what it does best. Sensor manufacturers bring out new sensors. Camera designers embed those sensors and provide programming controls, communications interfaces, and lens mounts. And optics professionals design and produce lenses. The customer benefits from a range of choices, performance options, and price points.

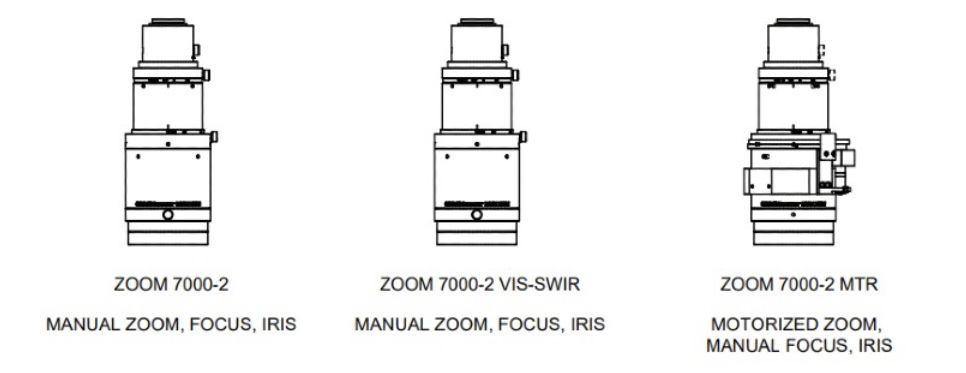

Navitar VIS-SWIR lenses

Navitar’s ZOOM 7000-2 macro lens imaging system delivers superb optical performance and image quality for visible and SWIR imaging. Its robust design ensures reliability even in harsh environments. ZOOM 7000-2 macro lenses are ideal for applications such as machine vision and scientific and medical imaging.

In fact, there are three models in the series.

Kowa FC24M multispectral lenses

Kowa’s FC24M C-mount lens series is manufactured with wide-band multi-coating, which minimizes flare and ghosting from VIS through NIR. These lenses are also compelling for a number of other reasons, including a wide working range (as close as 15 cm MOD), durable construction, and a unique close-distance aberration compensation mechanism.

That “floating feature” creates stable optical performance at various working distances. Internal lens groups move independently of each other, which optimizes alignment compared to traditional lens design.

Tamron Wide-band SWIR lenses

Other lensing options include Tamron’s Wide-band SWIR lenses. While the name says SWIR, in fact they are VIS-SWIR. They are designed for compatibility with Sony’s IMX990 and IMX991 SenSWIR sensors, giving you even more lens choices. Call us at 978-474-0044 if you’d like us to help you navigate to best-fit components in cameras, lensing, and lighting for your particular application.

1st Vision’s sales engineers have over 100 years of combined experience to assist in your camera and components selection. With a large portfolio of cameras, lenses, cables, network interface cards, and industrial computers, we can provide a full vision solution!

About you: We want to hear from you! We’ve built our brand on our know-how and like to educate the marketplace on imaging technology topics… What would you like to hear about?… Drop a line to info@1stvision.com with what topics you’d like to know more about.