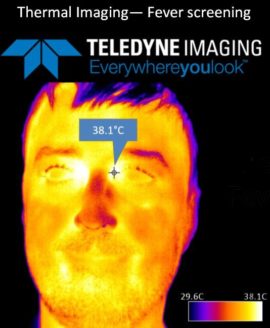

Long Wave Infrared (LWIR) cameras have long been used in industrial applications to detect infrared light in the 8–14 µm wavelength region. This infrared light is invisible radiant energy that we feel as heat but cannot see. Applications for LWIR cameras continue to expand beyond industrial uses and are now entering medical markets such as fever screening.
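
As a rough illustration of why the 8–14 µm band suits measuring people's temperature, Wien's displacement law gives the wavelength at which a blackbody emits most strongly, $\lambda_{\max} = b/T$, with $b \approx 2898\ \mu\text{m}\cdot\text{K}$. Treating skin as an approximate blackbody and assuming a skin surface temperature of about 34 °C (≈307 K), a typical value chosen here only for illustration, the emission peak falls inside the LWIR band:

$$
\lambda_{\max} = \frac{b}{T} \approx \frac{2898\ \mu\text{m}\cdot\text{K}}{307\ \text{K}} \approx 9.4\ \mu\text{m}
$$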

Teledyne Dalsa has expanded its Calibir LWIR camera series with the latest Calibir GXM model, which now offers radiometric capabilities. During outbreaks of infectious diseases such as COVID-19, LWIR cameras can be used for fever screening by detecting elevated skin temperatures. With suitable lenses, the cameras can take the temperature of individuals from a distance, keeping a safe separation between patients and medical practitioners.

Click HERE for a quote on the Calibir GXM LWIR camera

All cameras are factory-calibrated for reliable radiometric performance, have outstanding dynamic range and deliver the best possible NETD (Noise Equivalent Temperature Difference) over a wide temperature range (more than 600 °C). Together with features such as multiple regions of interest (ROIs), color maps, look-up tables (LUTs) and the ability to sync and trigger multiple cameras, these capabilities make the Calibir GXM a good solution for many thermal imaging applications.

Full specifications on Teledyne Dalsa LWIR cameras can be found HERE

Need a complete turnkey “Fever Detection System”? Contact our partners at Integro Technology. Click here to learn more.

1st Vision’s sales engineers have over 100 years of combined experience to assist with your camera selection. With a large portfolio of lenses, cables, NIC cards and industrial computers, we can provide a complete vision solution!

Previous related blogs:

Learn about Thermal Imaging – Problems solved with Dalsa’s new LWIR Calibir camera!