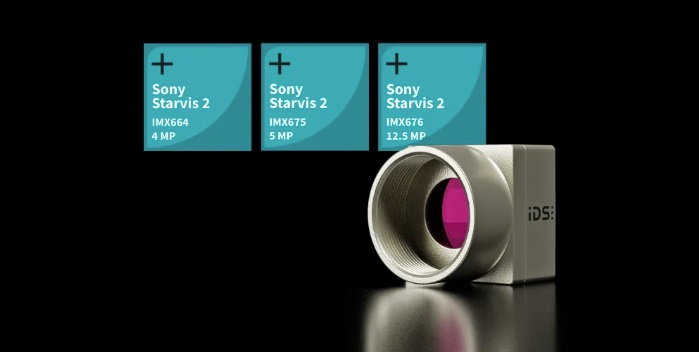

Sony has evolved its successful STARVIS high-sensitivity back-illuminated sensors into the next-generation STARVIS 2 family. The new sensors bring even wider dynamic range and are available in three resolutions: 4MP, 5MP, and 12.6MP. The sensor models are, respectively, the Sony IMX664, IMX675, and IMX676. IDS Imaging has in turn built these sensors into its uEye cameras.

Camera overview before deeper dive on the sensors

The new sensors, responsive in low ambient light to both visible and NIR wavelengths, are available in IDS’ compact, cost-effective uEye XCP and uEye XLS cameras. The XCP models are housed cameras with a C-mount and a USB3 interface. The XLS models are board-level cameras with C/CS-mount, S-mount, and no-mount options, also with a USB3 interface.

Choose the XCP models if you want the closed zinc die-cast housing, the screwable USB micro-B connector, and the C-mount lens adaptor for use with a wide range of multi-megapixel lenses. Digital I/O, trigger, and flash pins are also available for connection.

If you prefer a board-level camera for embedded designs, and even lower weight (from 3 to 20 grams), select one of the XLS formats. Options include C/CS-mount, S-mount, or no mount.

All models across both camera families comply with the U3V (USB3 Vision) and GenICam vision standards, so you may use the IDS Peak SDK or any other compliant software.

Deeper dive on the sensors themselves

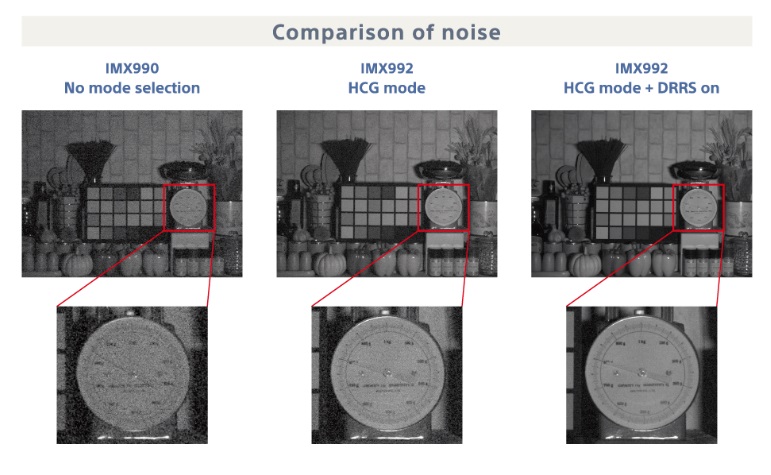

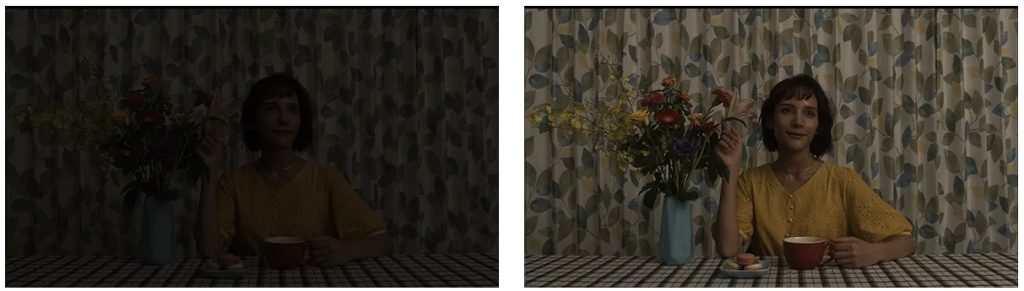

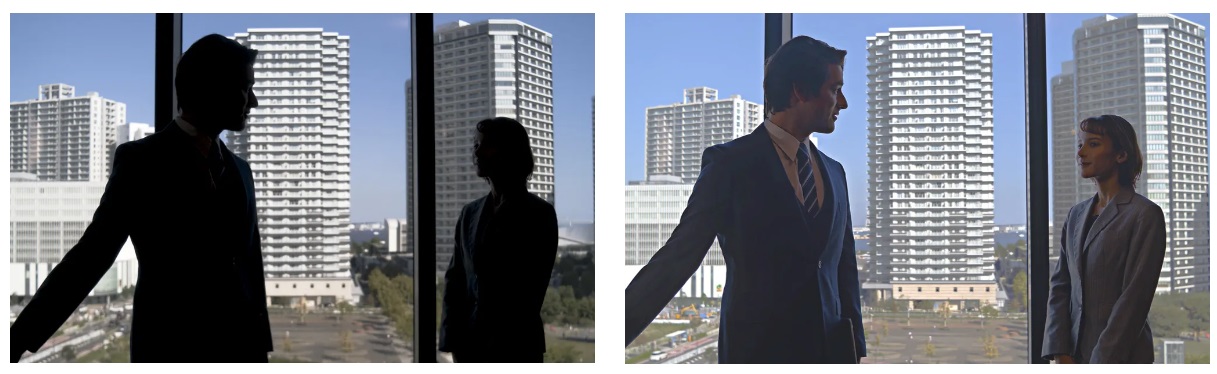

To motivate the technical discussion, let’s start with side-by-side images, only one of which was obtained with a STARVIS 2 sensor:

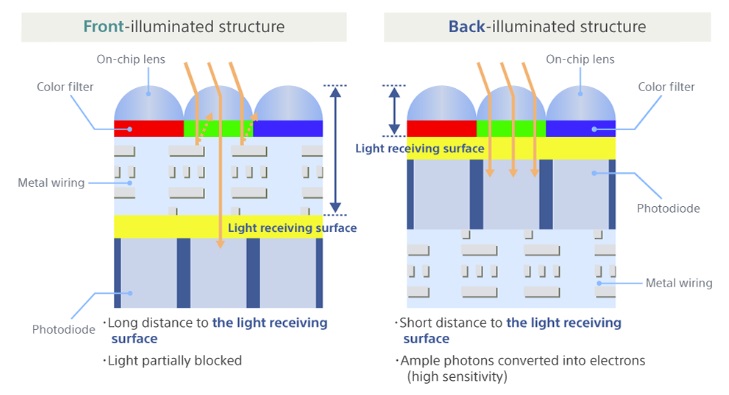

How is such a dramatic improvement over Sony’s earlier sensors possible? The key is the switch from a traditional front-illuminated design to STARVIS’ back-illuminated design. By positioning the photodiodes on top of the wiring layer, the back-illuminated approach collects more incident light, by a factor of 4.6.

See also a compelling 4-minute video showing images and streaming segments generated with and without STARVIS 2 sensors.

NIR as well as VIS sensitivity

The STARVIS 2 sensors not only deliver conventional visible-spectrum (VIS) performance but also perform well in the NIR range. If the sensor’s NIR sensitivity is sufficient for the application, one may avoid or reduce the need for supplemental NIR lighting. This is useful for license plate recognition, security, and other uses where lighting of certain wavelengths or intensities would disturb people.

Performance and feature highlights

The 4 MP Sony IMX664 delivers up to 48.0 fps at 2688 x 1536 pixels over the 5 Gbps USB3 interface. It pairs with lenses matched for sensor formats up to 1/1.8″.

Sony’s IMX675, with 2592 x 1960 pixels, provides 5 MP at frame rates up to 40.0 fps, via the same USB3 interface.

Finally, the 12.62 MP Sony IMX676, with its square 3552 x 3552 format, is ideal for microscopy and can still deliver up to 17.0 fps for applications with limited motion.
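As a quick sanity check on these figures, the short Python sketch below computes each sensor's megapixel count and its uncompressed data rate at the maximum frame rate, then compares that rate with the nominal 5 Gbps of USB3. The resolutions and frame rates are the ones quoted above; the 8 bits per pixel is an assumption (the pixel format is not stated here), and protocol overhead is ignored.

```python
# Resolution (width, height) and maximum frame rate as quoted in this article.
SENSORS = {
    "IMX664": (2688, 1536, 48.0),
    "IMX675": (2592, 1960, 40.0),
    "IMX676": (3552, 3552, 17.0),
}

USB3_NOMINAL_GBPS = 5.0  # nominal USB3 rate quoted in the article
BITS_PER_PIXEL = 8       # assumption: 8-bit mono or raw Bayer output


def megapixels(width, height):
    """Pixel count in millions."""
    return width * height / 1e6


def raw_gbps(width, height, fps, bits_per_pixel=BITS_PER_PIXEL):
    """Uncompressed image data rate in gigabits per second."""
    return width * height * fps * bits_per_pixel / 1e9


for name, (w, h, fps) in SENSORS.items():
    rate = raw_gbps(w, h, fps)
    share = 100 * rate / USB3_NOMINAL_GBPS
    print(f"{name}: {megapixels(w, h):.2f} MP, "
          f"{rate:.2f} Gbps at {fps:g} fps ({share:.0f}% of nominal USB3)")
```

Under these assumptions all three cameras work out to roughly 200 megapixels per second, well inside the nominal USB3 rate. Higher bit depths scale the data rate proportionally, so rerun the calculation with the pixel format you actually plan to use.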

While there are diverse sensor features to explore in the data sheets for both the uEye XCP and uEye XLS cameras, one particularly worth noting is High Dynamic Range (HDR). The HDR controls are available in the camera, so bright scene regions receive short exposures while darker regions receive longer ones. This yields a more usable dynamic range for your application to process.
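To illustrate the general idea behind multi-exposure HDR, here is a minimal Python sketch that merges a short and a long exposure of the same pixel. It is a generic textbook-style merge, not Sony's or IDS' actual implementation; the function names, the 8-bit saturation level, the exposure values, and the scene radiances are all hypothetical.

```python
SATURATION = 255  # hypothetical 8-bit full-scale value


def capture(radiance, exposure, saturation=SATURATION):
    """Simulate an idealised, noise-free pixel that clips at saturation."""
    return min(radiance * exposure, saturation)


def merge_exposures(short_px, long_px, exposure_ratio, saturation=SATURATION):
    """Merge two readings of one pixel onto the long-exposure scale.

    Use the long exposure where it is not clipped (better in dark regions);
    otherwise fall back to the short exposure, scaled by the exposure ratio.
    """
    if long_px < saturation:
        return long_px
    return short_px * exposure_ratio


# Hypothetical scene radiances, from dark to very bright (arbitrary units).
scene = [1, 10, 100, 200]
short_exposure, long_exposure = 1, 16  # hypothetical integer time units
ratio = long_exposure // short_exposure

merged = []
for radiance in scene:
    short_px = capture(radiance, short_exposure)
    long_px = capture(radiance, long_exposure)
    merged.append(merge_exposures(short_px, long_px, ratio))

print(merged)
```

In this toy scene, the long exposure alone would clip the two brightest pixels to the same value of 255, while the merged result keeps them distinct.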

Direct links to the cameras

In the table below, each camera is listed by model number, family, and sensor, with a link to the respective landing page for full details, spec sheets, etc.

| Model | Family | Sensor |
| --- | --- | --- |
| U3-34E0XCP | uEye XCP housed | SONY IMX664 |
| U3-34F0XCP | uEye XCP housed | SONY IMX675 |
| U3-34L0XCP | uEye XCP housed | SONY IMX676 |
| U3-34E1XLS | uEye XLS board | SONY IMX664 |
| U3-34F1XLS | uEye XLS board | SONY IMX675 |
| U3-34L2XLS | uEye XLS board | SONY IMX676 |

1st Vision’s sales engineers have over 100 years of combined experience to assist in your camera and components selection. With a large portfolio of cameras, lenses, cables, NIC cards and industrial computers, we can provide a full vision solution!

About you: We want to hear from you! We’ve built our brand on our know-how and like to educate the marketplace on imaging technology topics… What would you like to hear about?… Drop a line to info@1stvision.com with what topics you’d like to know more about.