Effective machine vision outcomes depend upon getting a good image. A well-chosen sensor and camera are a good start. So is a suitable lens. Just as important is lighting, since one needs photons coming from the object being imaged to pass through the lens and generate charges in the sensor, in order to create the digital image one can then process in software. Elsewhere we cover the full range of components to consider, but here we’ll focus on lighting.

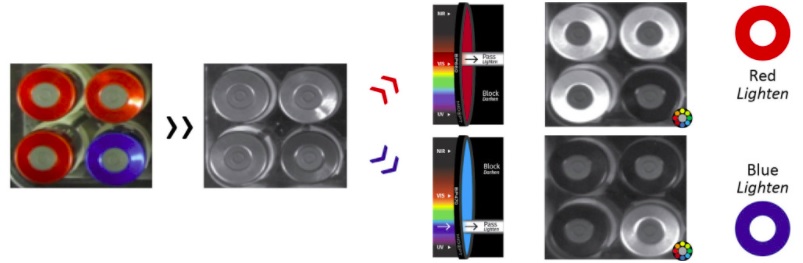

While some applications are sufficiently well lit without augmentation, many machine vision solutions are achievable only with lighting matched to the sensor, lens, and object being imaged. That lighting may be white light, which comes in various color “temperatures”, or it may be red, blue, ultraviolet (UV), infrared (IR), or hyperspectral, for example.

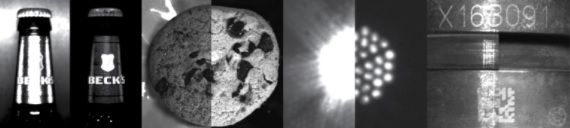

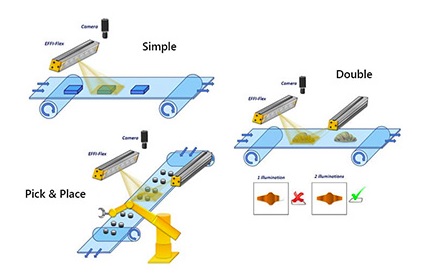

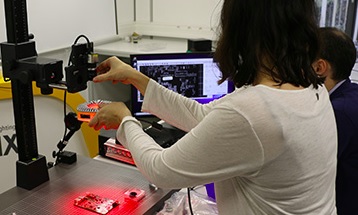

LED bar lights are a particularly common choice, able to provide bright field or dark field illumination, according to how they are deployed. The accompanying illustrations show several different scenarios.
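As a rough sketch of how deployment decides between bright field and dark field, consider a deliberately simplified model: the camera looks straight down at a flat, mirror-like surface, the bar light is treated as a point source, and only the center of the field of view is considered. Under those assumptions, light reflects into the lens (bright field) only when its angle of incidence is within the lens's acceptance half-angle; otherwise the lens sees only light scattered by surface features (dark field). The function name and parameters below are hypothetical, chosen for illustration only.

```python
import math


def classify_illumination(light_height_mm, light_offset_mm, lens_half_angle_deg):
    """Classify a light position as 'bright field' or 'dark field'.

    Simplifying assumptions (not from any vendor documentation):
    - the camera's optical axis is perpendicular to a flat, specular surface
    - the light is a point source aimed at the center of the field of view
    - lens_half_angle_deg is the half-angle of the cone of rays the lens accepts
    """
    # Angle of incidence, measured from the surface normal.
    incidence_deg = math.degrees(math.atan2(light_offset_mm, light_height_mm))

    # A specular reflection leaves at the same angle on the opposite side of
    # the normal, so it enters the lens only if that angle is small enough.
    if incidence_deg <= lens_half_angle_deg:
        return "bright field"
    return "dark field"


# A light mounted high and close to the optical axis:
print(classify_illumination(300, 20, 10))   # bright field
# A light mounted low and far off to the side:
print(classify_illumination(50, 300, 10))   # dark field
```

Real parts are rarely flat mirrors, and a bar light is an extended source, so treat this as an intuition aid rather than a substitute for testing with actual parts.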

LED light bars conventionally had to be factory assembled for specific customer requirements, and could not be reconfigured in the field. The EFFI-Flex LED bar breaks free from many of those constraints. It is available in various lengths, and many of its features can be adapted in the field by the user, including, for example:

- Color of light emitted

- Emitting angle

- Optional polarizer

- Built-in controller – continuous vs. strobed option

- Diffuser window opacity: Transparent, Semi-diffusive, Opaline

While the EFFI-Flex offers maximum configurability, sister products such as the EFFI-Flex-CPT and EFFI-Flex-IP69K offer IP67 and IP69K protection, respectively, making them well suited to environments that require ruggedized or washdown-rated components.

Do you have an application you need tested with lights? Contact us and we can get your parts in the lab, test them, and send images back. If your materials can’t be shipped because they are perishable foodstuffs, hazardous materials, or similar, contact us anyway and we’ll figure out how to source the items or bring lights to your facility.

1st Vision’s sales engineers have over 100 years of combined experience and can assist with your camera and component selection. With a large portfolio of lenses, cables, NICs, and industrial computers, we can provide a full vision solution!