Machine vision systems are widely valued across many fields. Whether in manufacturing, pick-and-place, quality control, medicine, or other areas, vision systems save money, create competitive advantage, or both.

BUT deploying new systems can be tricky

For experienced machine vision system builders, sometimes the next new system is either “simple” in complexity, or a minor variant on previously built complex systems in which one has good confidence. Deployment, systems acceptance testing and validation may be straightforward, with little doubt about correctness or reliability. Congratulations if that’s the case.

For new undertakings, even for experienced vision system builders, there’s often some uncertainty in the “black box” nature of a new system. Can I trust the results as accurate? Am I double counting? Am I missing any frames? If the system overloads, how can I track which inspections were skipped while the system recovers? How to troubleshoot and debug? And rising QA standards are pushing us to verify performance – yikes!

T2IR: Trigger to Image Reliability can open up the black box

Take the mystery out of system deployment, tuning, and commissioning. T2IR’s powerful capabilities essentially open up the black box. And for users of many Teledyne DALSA cameras and framegrabber products, T2IR is free!

Bundled with many Teledyne DALSA products

The T2IR features are available at no cost for customers who purchase (or already own) any of:

Cameras: Genie Nano, Linea, Piranha, and others

Frame Grabbers: Xtium and Xcelera

Software: Sapera LT SDK (for both Windows and Linux)

T2IR in a nutshell

T2IR, Trigger to Image Reliability, is a set of hardware and software features that help improve system reliability. It’s offered as a free benefit to Teledyne DALSA customers for many of their cameras and framegrabbers. It helps you get inside your system to audit and debug image flow.

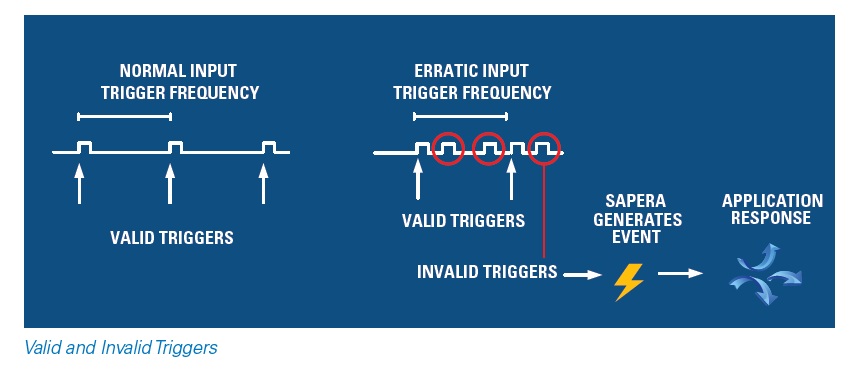

Example One: Coping with unexpected triggers

Consider the following diagram:

To the left of the illustration we see the expected normal trigger frequency. Each trigger, perhaps from a mechanical event and an actuator sending an electronic pulse, triggers the next camera image, readout, and processing, at intervals known to be sufficient for complete processing. No lost or partial images. No overtriggering. All as expected.

But in the middle of the illustration we see invalid triggers interspersed with valid ones. What would happen in your system if you didn’t plan for this possibility? Lost or partial images? Risk of shipping bad product to customers due to missed inspections? Damage to your system? What’s the source of the extra triggers? How would you debug the problem if it is found during design and commissioning? How would you recover if the system is already in production?

With T2IR, the Sapera API may be programmed to detect invalid triggers that fall outside the expected timing intervals. An event can be generated for the application to manage. The application might be programmed to do something like “stop the system”, “tag the suspect images”, “note the timestamps”, or otherwise make a controlled recovery.

Key takeaway: instead of the system just blundering along with the invalid triggers undetected, with possible grave consequences, T2IR can help create a more robust system that recovers gracefully.
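To make the idea concrete, here is a minimal sketch in plain Python of the kind of check described above: flagging triggers that arrive sooner than a minimum expected interval. This is not the Sapera API. The class `TriggerMonitor`, its method `on_trigger`, and the 20 ms interval are all hypothetical names and values chosen for illustration only.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TriggerMonitor:
    """Hypothetical helper: rejects triggers that arrive too soon after the last valid one."""
    min_interval_us: int                      # assumed minimum spacing between valid triggers
    last_valid_us: Optional[int] = None       # timestamp of the last accepted trigger
    rejected: List[int] = field(default_factory=list)

    def on_trigger(self, timestamp_us: int) -> bool:
        """Return True if the trigger is accepted, False if it is flagged as invalid."""
        too_soon = (
            self.last_valid_us is not None
            and timestamp_us - self.last_valid_us < self.min_interval_us
        )
        if too_soon:
            # A real application might stop the line or tag the image here.
            self.rejected.append(timestamp_us)
            return False
        self.last_valid_us = timestamp_us
        return True


# Illustrative trigger times in microseconds; 45_000 and 61_000 are spurious.
monitor = TriggerMonitor(min_interval_us=20_000)
triggers_us = [0, 20_000, 40_000, 45_000, 60_000, 61_000, 80_000]
accepted = [t for t in triggers_us if monitor.on_trigger(t)]

print("accepted:", accepted)
print("rejected:", monitor.rejected)
```

In this sketch the rejected timestamps are simply recorded. Which recovery action fits (stopping, tagging, or logging) depends on the application.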

Example Two: Tracking and Tracing Images – Tagging with timestamps

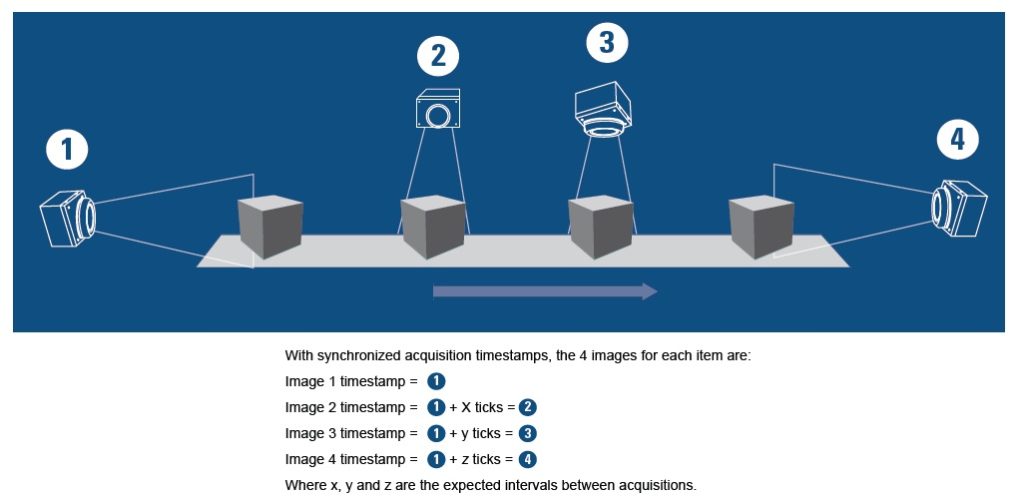

Consider the challenges of high-speed materials handling at, let’s say, 3000 parts per minute. Some such systems require multiple cameras, let’s say 4 per object, to make an accept, reject, or re-inspect decision on each component. For this hypothetical system, that would be 12,000 images per minute to coordinate!
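The arithmetic behind that figure, and what it implies per second, can be worked through in a few lines. The per-image budget below assumes, purely for illustration, that a single pipeline handles every image one after another. A real system may process cameras in parallel.

```python
PARTS_PER_MINUTE = 3000
CAMERAS_PER_PART = 4

images_per_minute = PARTS_PER_MINUTE * CAMERAS_PER_PART   # 12,000 images per minute
images_per_second = images_per_minute / 60                # images arriving each second
part_interval_ms = 60_000 / PARTS_PER_MINUTE              # time between successive parts
avg_budget_per_image_ms = 1000 / images_per_second        # serial-processing assumption

print(f"{images_per_minute} images/min, {images_per_second:.0f} images/s")
print(f"{part_interval_ms:.0f} ms between parts, "
      f"{avg_budget_per_image_ms:.0f} ms average budget per image")
```

At 200 images per second, a single misattributed image is easy to miss without per-image tagging.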

The illustration above shows the timestamps one might assign with T2IR, based on ticks in microseconds, according to known or believed belt motion speed. But what if results seem corrupted using that method, or if there are doubts about whether speed is constant or variable?

With the Xtium frame grabber, one can use T2IR to choose among alternate timestamp bases besides the “hoped for” microsecond calculations:

So one might prefer to tag the images with an external trigger or a shaft encoder count, which are physically coupled to the system’s movements, and hence more precise than calculated assumptions.
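A small sketch shows why an encoder-based tag can be more trustworthy than a time-based one when belt speed varies. Everything here is hypothetical: the nominal speed, the encoder resolution, and the function names are assumptions for illustration, not values or calls from the Xtium or Sapera documentation.

```python
NOMINAL_SPEED_MM_PER_S = 500   # assumed ("hoped for") belt speed
COUNTS_PER_MM = 10             # assumed shaft encoder resolution


def position_from_time(timestamp_us: int) -> float:
    """Estimate belt position from elapsed time, trusting the nominal speed."""
    return timestamp_us * NOMINAL_SPEED_MM_PER_S / 1_000_000


def position_from_encoder(count: int) -> float:
    """Derive belt position from encoder counts, which follow the real motion."""
    return count / COUNTS_PER_MM


# Hypothetical scenario: the belt runs at 500 mm/s for the first second,
# then slows to 400 mm/s for the next second.
samples = [
    (1_000_000, 5_000),   # (timestamp in us, encoder count) after 1 s
    (2_000_000, 9_000),   # after 2 s: only 400 mm travelled in the second interval
]

for timestamp_us, count in samples:
    by_time = position_from_time(timestamp_us)
    by_encoder = position_from_encoder(count)
    print(f"t={timestamp_us} us: time-based {by_time:.0f} mm, "
          f"encoder-based {by_encoder:.0f} mm, error {by_time - by_encoder:.0f} mm")
```

In this scenario the time-based estimate is off by 100 mm after two seconds, while the encoder-based value tracks the slowdown because it is tied to the physical movement.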

Sounds promising, how to learn more about T2IR?

Pursue any/all of the following:

Detailed primer: T2IR Whitepaper

Read further in this blog: Additional high-level illustrations and another example below

Speak with 1stVision: Sales engineers are happy to speak with you – call 978-474-0044.

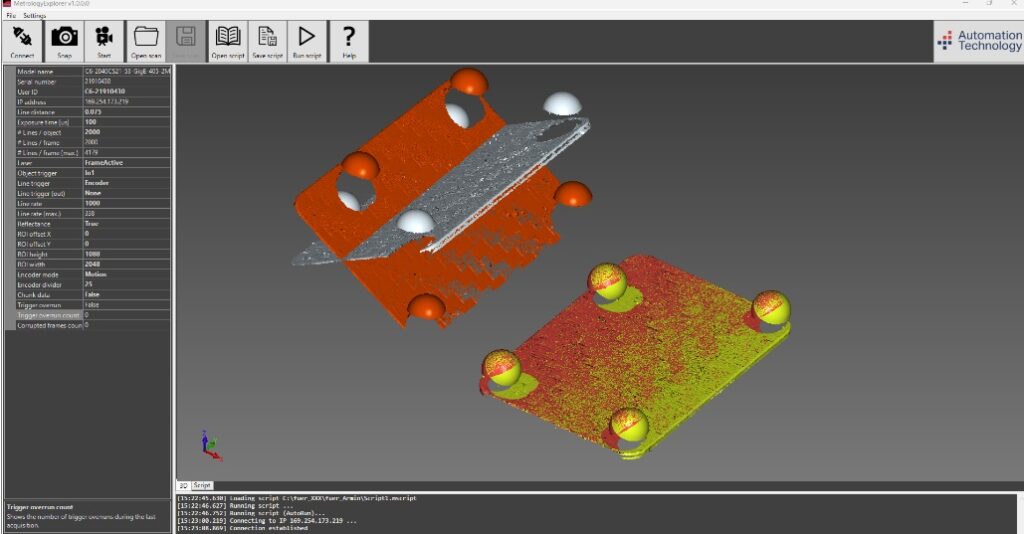

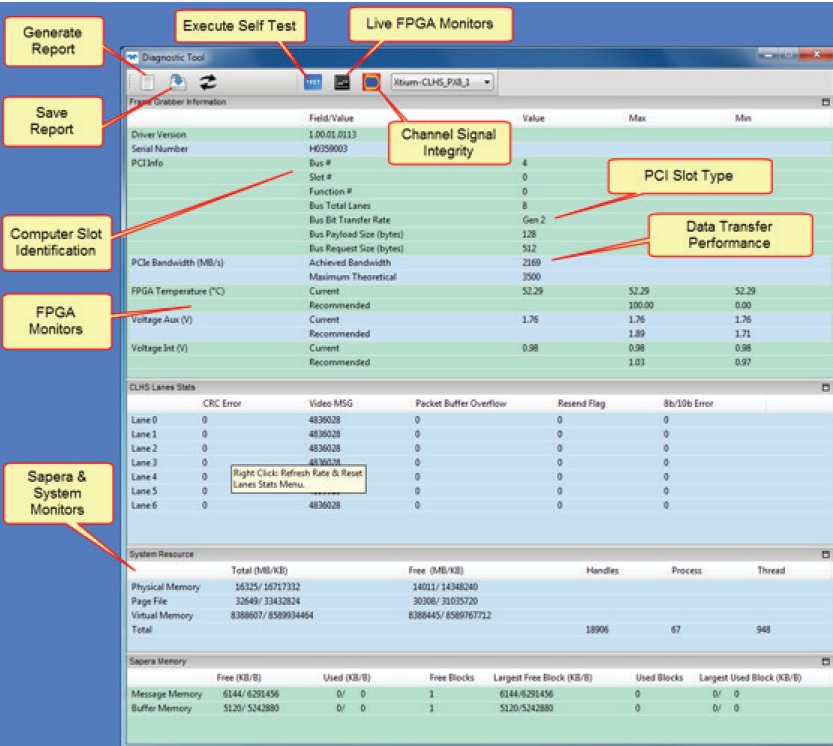

Diagnostic tool: a brief overview

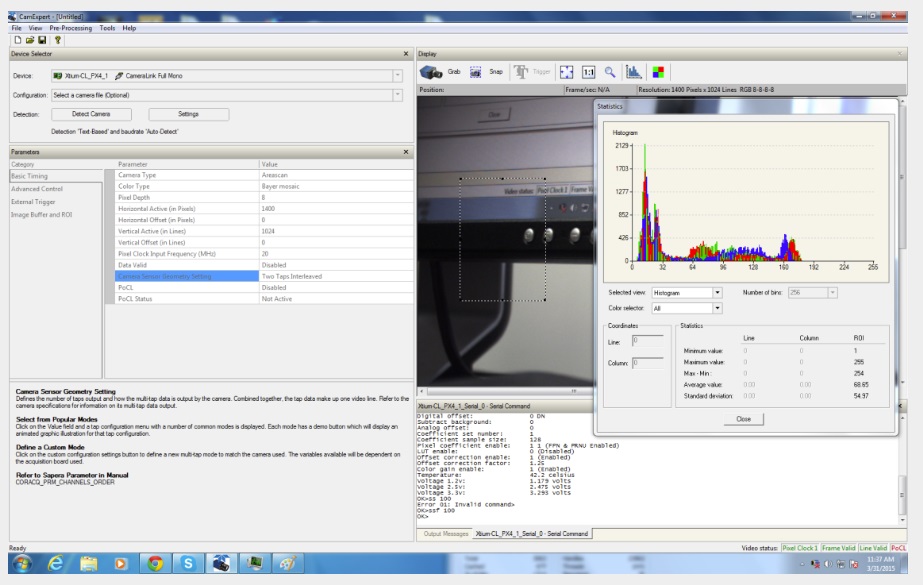

Consider the following screenshot, in the context of an Xtium framegrabber together with T2IR:

The point of the above annotated screenshot is to give a sense of the level of detail provided even in this top-level window, with drill-ins to inspect and control diverse parameters, to generate reports, etc. This is “just” a teaser blog meant to whet the appetite; read the tutorial, speak with us, or see the manual for a deeper dive.

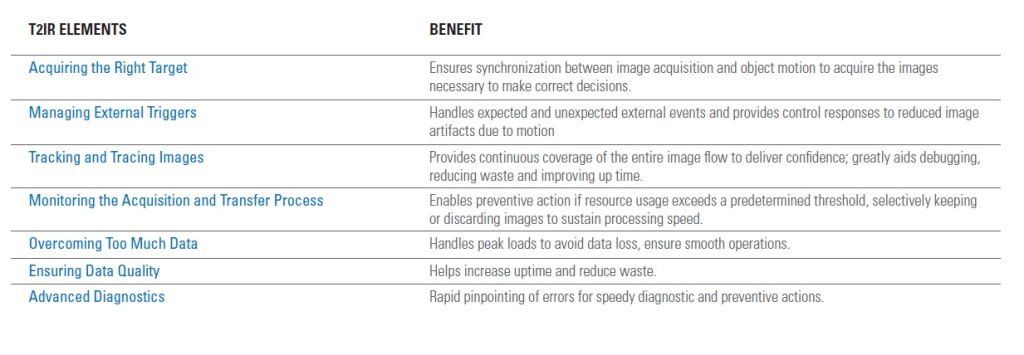

T2IR Elements and Benefits – in more detail

Conclusion

Every major machine vision camera and software provider offers an SDK and at least some configuration and control features. But Trigger to Image Reliability – T2IR – takes traceability and control to another level. And for users of so many Teledyne DALSA cameras, framegrabbers, and software, it’s bundled in at no cost!

1stVision’s sales engineers have over 100 years of combined experience to assist in your camera and components selection. With a large portfolio of cameras, lenses, cables, NICs, and industrial computers, we can provide a full vision solution!

About you: We want to hear from you! We’ve built our brand on our know-how and like to educate the marketplace on imaging technology topics… What would you like to hear about?… Drop a line to info@1stvision.com with what topics you’d like to know more about.