Newer is better, right? Well, yes, if by better one wants the very highest performance. More on that below. But the predecessor generations are performant in their own right, and remain cost-effective and appropriate for many applications. We often get the question “What’s the difference?” In this piece we summarize key differences among the 4 generations of SONY Pregius sensors.

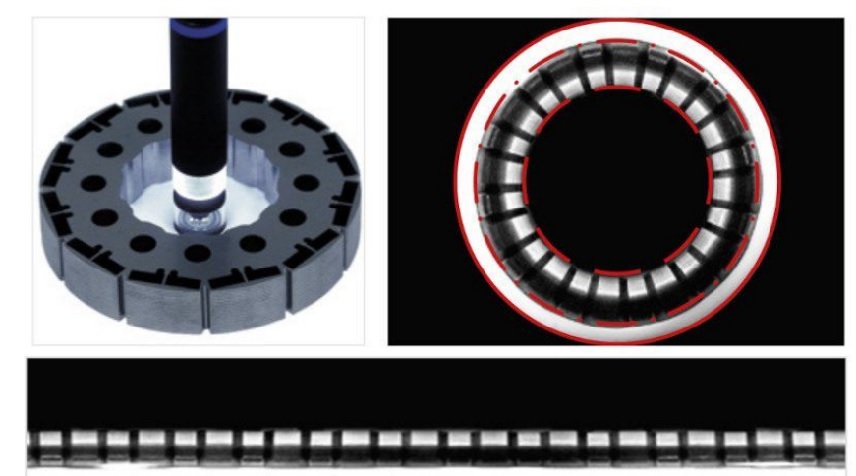

In machine vision, sensors matter. Duh. As do lenses. And lighting. It’s all about creating contrast. And reducing noise. Each term linked above takes you to supporting pieces on those respective topics.

This piece is about the four generations of the SONY Pregius sensor. Why feature a particular sensor manufacturer’s products? Yes, there are other fine sensors on the market, and we write about those sometimes too. But SONY Pregius enjoys particularly wide adoption across a range of camera manufacturers. They’ve chosen to embed Pregius sensors in their cameras for a reason. Or a number of reasons really. Read on for details.

Machine vision cameras continue to reap the benefits of the latest CMOS image sensor technology since Sony announced the discontinuation of CCDs. We have been testing and comparing various sensors over the years and frequently recommend Sony Pregius sensors when dynamic range and sensitivity are needed.

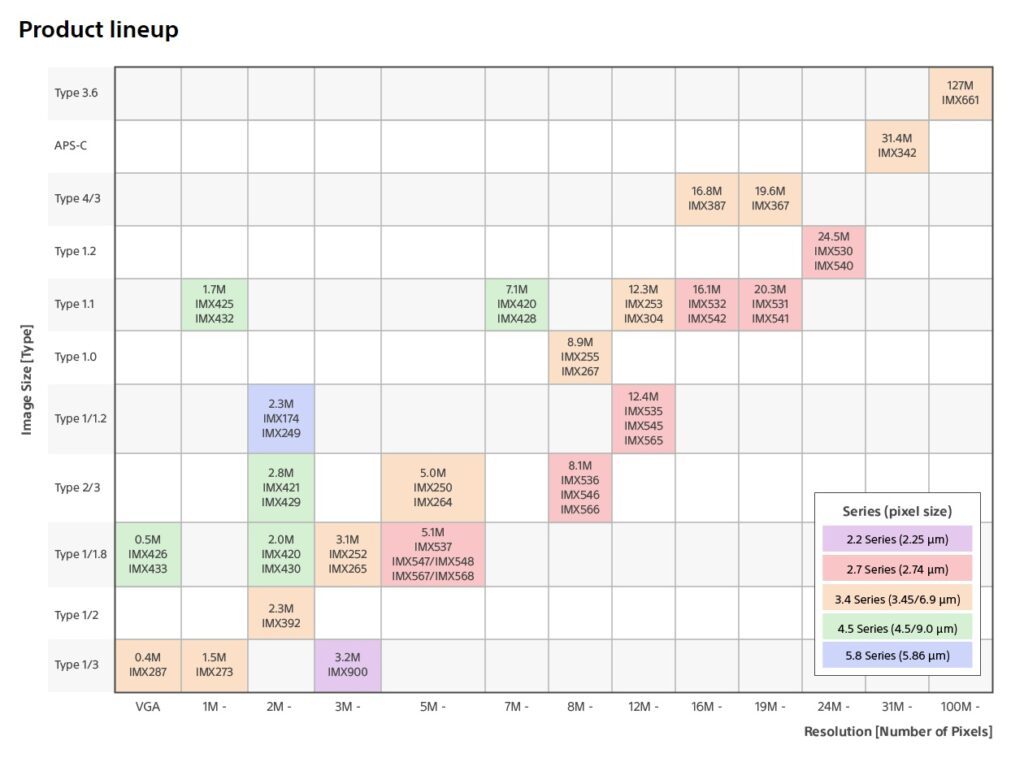

If you follow sensor evolution, even passively, you have probably also seen a ton of new image sensor names within the “Generations”. But most users make a design-in sensor and camera choice, and then live happily with that choice for a few years. As we do when choosing a car, a TV, or a laptop. So unless you are constantly monitoring the sensor release pipeline, it’s hard to keep track of all of Sony’s part numbers. We will try to give you some insight into the progression of Sony’s Pregius image sensors used in industrial machine vision cameras.

How can I tell if it’s a Sony Pregius sensor?

Sony’s image sensor part numbers carry prefixes that make it easy to identify the sensor family. All Sony Pregius sensors have a prefix of “IMX.” Example: IMX174, which today is one of the best sensors for dynamic range.

What are the differences in the “Generations” of Sony Pregius Image sensors?

Sony Pregius Generation 1:

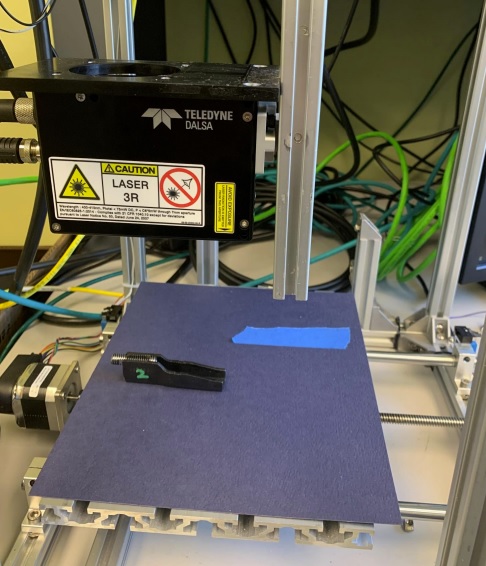

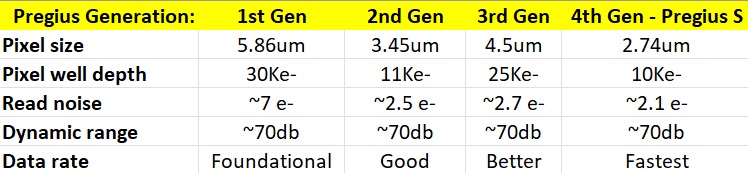

Gen 1 primarily consisted of a 2.4MP resolution sensor with 5.86um pixels, but it had a well depth (saturation capacity) of 30Ke-, which is still unique among the generations. Sony also brought the new generations to market with “slow” and “fast” versions of the sensors at two different price points. In this case, the IMX174 and IMX249 were incorporated into industrial machine vision cameras, providing two levels of performance. For example, the Dalsa Nano M1940 (52 fps) uses the IMX174 while the Dalsa Nano M1920 (39 fps) uses the IMX249, and the IMX249 is 40% less in price.

Sony Pregius Generation 2:

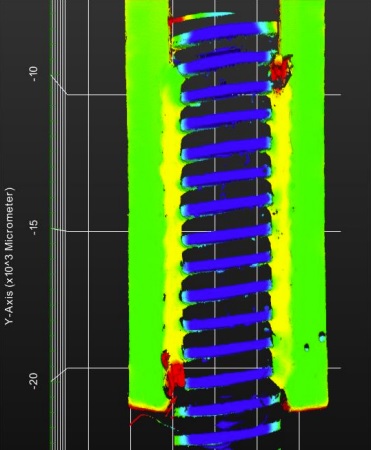

Sony’s main goal with Gen 2 was to expand the portfolio of Pregius sensors, which ranges from VGA to 12 MP. The pixel size decreased to 3.45um and the well depth to ~10Ke-, but noise also decreased! The smaller pixels allowed smaller format lenses to be used, saving overall system cost. However, this placed greater demands on lens resolution, since the lens must be able to resolve the 3.45um pixels. In general, Gen 2 offered a great family of image sensors and, in turn, an abundance of industrial machine vision cameras at lower cost than CCDs with better performance.
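To put a rough number on the lens-resolution point, here is a minimal sketch that converts pixel size into the sensor’s Nyquist frequency in line pairs per millimeter. It assumes the common rule of thumb that one line pair spans two pixels; the function name `nyquist_lp_per_mm` is our own, and real lens selection involves more than this single figure.

```python
def nyquist_lp_per_mm(pixel_pitch_um: float) -> float:
    """Sensor Nyquist frequency in line pairs per mm for a pixel pitch in micrometers."""
    if pixel_pitch_um <= 0:
        raise ValueError("pixel pitch must be positive")
    # One line pair needs two pixels; there are 1000 um in a mm.
    return 1000.0 / (2.0 * pixel_pitch_um)


# Pixel sizes quoted in this article for each Pregius generation.
PIXEL_PITCH_UM = {"Gen 1": 5.86, "Gen 2": 3.45, "Gen 3": 4.5, "Gen 4": 2.74}

for generation, pitch in PIXEL_PITCH_UM.items():
    print(f"{generation}: {pitch} um pixels -> {nyquist_lp_per_mm(pitch):.0f} lp/mm")
```

By this estimate a 5.86um pixel asks for roughly 85 lp/mm from the lens, while a 3.45um pixel asks for roughly 145 lp/mm, which is why smaller pixels are more demanding on optics.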

Sony Pregius Generation 3:

For Gen 3, Sony took the best of both Gen 1 and Gen 2. The pixel size increased to 4.5um, increasing the well depth to 25Ke-! This generation has fast data rates, excellent dynamic range and low noise. The family ranges from VGA to 7.1MP. Gen 3 sensors started appearing in our machine vision camera lineup in 2018 and have continued to be designed in to cameras over the last few years.
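Well depth and noise together set dynamic range, so it can help to see how the two interact. The sketch below uses the standard relationship of 20·log10(well depth / read noise). The well depths are the ones quoted in this article; the read noise value is a hypothetical placeholder, not a datasheet figure, and in practice it differs by sensor (Gen 2, as noted, lowered noise along with well depth).

```python
import math


def dynamic_range_db(well_depth_e: float, read_noise_e: float) -> float:
    """Dynamic range in dB from saturation capacity and read noise, both in electrons."""
    if well_depth_e <= 0 or read_noise_e <= 0:
        raise ValueError("well depth and read noise must be positive")
    return 20.0 * math.log10(well_depth_e / read_noise_e)


# Well depths quoted in this article (electrons).
WELL_DEPTH_E = {"Gen 1": 30_000, "Gen 2": 10_000, "Gen 3": 25_000}

# HYPOTHETICAL read noise, used only to show the shape of the calculation.
ASSUMED_READ_NOISE_E = 5.0

for generation, well in WELL_DEPTH_E.items():
    dr = dynamic_range_db(well, ASSUMED_READ_NOISE_E)
    print(f"{generation}: {well} e- at {ASSUMED_READ_NOISE_E} e- noise -> {dr:.1f} dB")
```

Because the ratio is what matters, halving the read noise buys back about 6 dB, the same as doubling the well depth. Use measured or datasheet noise values for any real comparison.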

Sony Pregius Generation 4:

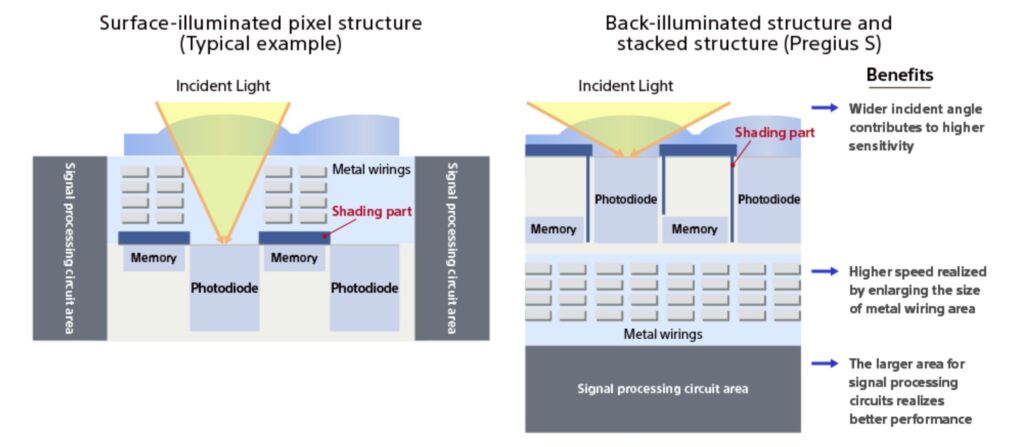

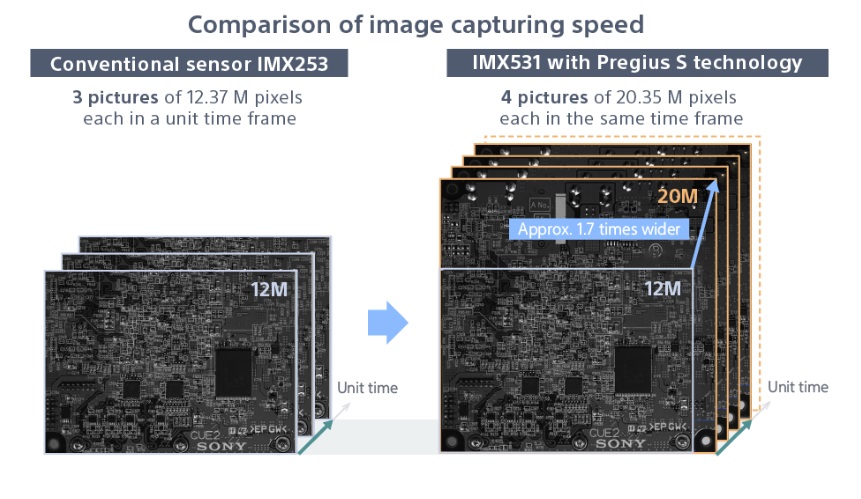

The 4th generation is denoted Pregius S, and is designed in to a range of cameras from 5 through 25 Megapixels. Like the prior generations, Pregius S provides global shutter for active pixel CMOS sensors using Sony Semiconductor’s low-noise structure.

New with Pregius S is a back-illuminated structure – this enables smaller sensor size as well as faster frame rates. The benefits of faster frame rates are self-evident. But why is smaller sensor size so important? If two sensors, with the same pixel count, and equivalent sensitivity, are different in size, the smaller one may be able to use a smaller lens – reducing overall system cost.
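As a rough illustration of the sensor-size argument, the sketch below computes the active-area dimensions and diagonal from pixel count and pixel size. The 2448 x 2048 resolution is an assumption chosen only so that both cases share the same pixel count; it is not taken from a specific datasheet, and the helper name `sensor_size_mm` is ours.

```python
import math


def sensor_size_mm(width_px: int, height_px: int, pixel_pitch_um: float):
    """Return (width, height, diagonal) of the active area in millimeters."""
    width_mm = width_px * pixel_pitch_um / 1000.0
    height_mm = height_px * pixel_pitch_um / 1000.0
    return width_mm, height_mm, math.hypot(width_mm, height_mm)


# ASSUMED resolution, identical for both cases so only pixel size changes.
WIDTH_PX, HEIGHT_PX = 2448, 2048

for label, pitch in [("3.45 um pixels (Gen 2)", 3.45), ("2.74 um pixels (Pregius S)", 2.74)]:
    w, h, d = sensor_size_mm(WIDTH_PX, HEIGHT_PX, pitch)
    print(f"{label}: {w:.2f} x {h:.2f} mm, diagonal {d:.2f} mm")
```

With the same pixel count, the diagonal drops from about 11.0 mm to about 8.7 mm, which is the kind of reduction that can allow a smaller lens format.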

Pregius S benefits:

With each Pregius S photodiode closer to the micro-lens, a wider incident angle is created. This admits more light. Which enhances sensitivity. At low incident angles, the Pregius S captures up to 4x as much light as Sony’s own highly-praised 2nd generation Pregius from just a few years ago!

With pixels only 2.74um square, one can achieve high resolution even in small cube-size cameras, continuing the evolution of more capacity and performance in less space.

Fun fact: The “S” in Pregius S is for stacked, the layered architecture of the sensor with the photodiode on top and circuits below, which, as noted, has performance benefits. It’s such an innovation, despite the already high-performing Gens 1, 2, and 3, that Sony named Gen 4 Pregius S to really call out the benefits.

Summary

While Pregius S sensors are very compelling, the prior generation Pregius sensors remain an excellent choice for many applications. It comes down to performance requirements and cost, to achieve the optimal solution for any given application.

Many Pregius sensors, including Pregius S, can be found in industrial cameras offered by 1stVision. Use our camera selector to find Pregius sensors, any starting with “IMX”. For Pregius S in particular, supplement that prefix with a “5”, i.e. “IMX5”, to find Pregius S sensors like IMX540, IMX541, …, IMX548.
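If you are working from an exported camera list rather than the selector, the same prefix rule of thumb can be sketched in a few lines. This is only a heuristic that mirrors the search tip above; the function name and return labels are hypothetical, and a part number’s generation should always be confirmed against the camera or sensor datasheet.

```python
def classify_part_number(part_number: str) -> str:
    """Apply the prefix rule of thumb from this article to a sensor part number."""
    normalized = part_number.strip().upper()
    if normalized.startswith("IMX5"):
        # Article's tip: the "IMX5" prefix finds Pregius S parts such as IMX540.
        return "Pregius S candidate"
    if normalized.startswith("IMX"):
        return "Sony IMX sensor"
    return "other"


# Part numbers mentioned in this article, plus one made-up non-Sony string.
for part in ["IMX174", "IMX249", "IMX540", "IMX548", "ABC123"]:
    print(f"{part}: {classify_part_number(part)}")
```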

Sony Pregius image sensor Comparison Chart

1stVision’s sales engineers have over 100 years of combined experience to assist in your camera and components selection. With a large portfolio of lenses, cables, NIC cards and industrial computers, we can provide a full vision solution!