This is a question we get asked frequently: “Why should we pay $200-plus for your board-level machine vision camera when we can just get a webcam for $69?”

A great question and maybe you can, but what ARE the differences?

Basically, there are just a few questions you need to answer to see whether a webcam will work for your machine vision application:

- Do you need to program to integrate the video into an application with processing or control?

- Do you need consistent image quality?

- Are you doing computer vision (the computer makes decisions based on the images) rather than just viewing the images yourself?

- Do you care if the camera specifications change over your product’s life cycle?

- Is the object under inspection moving?

- Do you need to control when you take the picture or interface to a trigger or strobe?

- Do you need to be able to choose what lens you will need?

If the answer to any of the above is YES, then a webcam will work poorly, or not at all, for your application. If all the answers are NO, then by all means, you might be able to save money and just use a low-cost webcam. (You can stop reading here if you want, or continue for more details below.)

Machine Vision Camera Software

Webcams do NOT come with an SDK, as they are made to show video only. They normally provide a universal video driver and an application for viewing video.

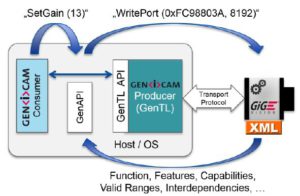

Industrial machine vision cameras come with an SDK programmable in C/C++/C#/etc. It lets you programmatically control both image acquisition and the camera’s parameters. (Example HERE to show extensive support of various operating systems and download)

Moving objects

Webcams use rolling shutter sensors, which expose the image one row at a time, so they cannot acquire images of moving objects without distorting or ‘smearing’ them. Industrial machine vision cameras are available with global shutter sensors, which expose every pixel at the same instant and freeze the motion to produce smear-free images of moving objects.
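To get a feel for how much a moving object is affected, the rough Python sketch below estimates two effects: blur from movement during the exposure, and the skew a rolling shutter adds because the rows are read out one after another. All numbers (conveyor speed, field of view, row readout time) are hypothetical values chosen for illustration, not specifications of any particular camera.

```python
def motion_blur_px(speed_mm_s, exposure_s, fov_mm, pixels_across):
    """Pixels the object travels during one exposure (any shutter type)."""
    mm_per_px = fov_mm / pixels_across
    return speed_mm_s * exposure_s / mm_per_px


def rolling_shutter_skew_px(speed_mm_s, row_time_s, rows, fov_mm, pixels_across):
    """Offset in pixels between the first and last row of a rolling shutter frame."""
    mm_per_px = fov_mm / pixels_across
    readout_s = row_time_s * rows  # time to read the whole frame, row by row
    return speed_mm_s * readout_s / mm_per_px


# Assumed setup: object moving at 500 mm/s, 200 mm field of view across
# 1280 pixels, 720 rows read at 20 microseconds per row, 1 ms exposure.
blur = motion_blur_px(500, 0.001, 200, 1280)
skew = rolling_shutter_skew_px(500, 20e-6, 720, 200, 1280)

print(f"Blur during exposure: {blur:.1f} px")   # 3.2 px
print(f"Rolling shutter skew: {skew:.1f} px")   # 46.1 px
```

With these assumed values the skew from the row-by-row readout is far larger than the blur from the exposure itself, and shortening the exposure does not remove it.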

Trigger and Strobe Control

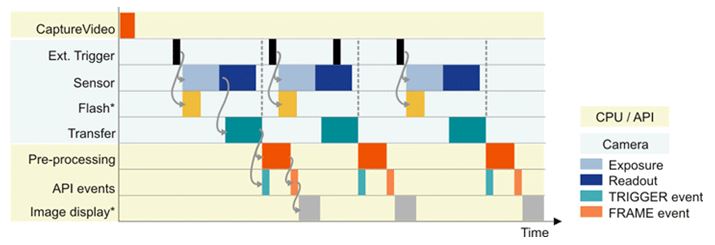

Webcams only have an interface to the USB data, whereas industrial machine vision cameras have hardware and software inputs and outputs. These allow for exact timing for a trigger to take a picture and a strobe to illuminate the object.
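As a rough illustration of why exact trigger timing matters, the Python sketch below converts timing uncertainty into position uncertainty for a part on a conveyor. The jitter figures are assumptions picked for the example (on the order of milliseconds for a capture started from software, microseconds for a hardware trigger input); real values depend on the camera, the interface, and the host PC.

```python
def position_error_mm(speed_mm_s, timing_jitter_s):
    """How far the part can move within the timing uncertainty of the capture."""
    return speed_mm_s * timing_jitter_s


conveyor_speed = 500.0  # mm/s, hypothetical line speed

# Assumed jitter values, for illustration only.
software_err = position_error_mm(conveyor_speed, 0.010)   # ~10 ms, software-started capture
hardware_err = position_error_mm(conveyor_speed, 10e-6)   # ~10 microseconds, hardware trigger

print(f"Software-timed capture: part position varies by up to {software_err:.3f} mm")
print(f"Hardware-triggered capture: part position varies by up to {hardware_err:.3f} mm")
```

Under these assumptions the part can land anywhere within a 5 mm window from frame to frame with software timing, compared with a few microns using a hardware trigger.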

Camera Specs Changing over time

Webcams just need to show you video! As a result, the manufacturers are not concerned if the sensors inside the camera change every six months. Whether the sensitivity changes by 10% makes no difference when you are just video conferencing with Grandma.

Industrial machine vision cameras are made with image sensors that don’t go obsolete every six months; instead, manufacturers aim for 10-year life spans. That matters a great deal if you have 5 man-years of software development invested in a computer vision algorithm and the sensor’s sensitivity changes by even 1%.

Furthermore, the form factor of webcams changes frequently as well. This doesn’t make a difference when the camera just sits on your desk. It makes a huge difference when your camera and lens are fixtured in a machine that took 500 hours of CAD work to design, never mind build. Moving the camera and lens 10 cm might not be possible!

Do you need to choose your lens?

Webcams come with an integrated lens that is suitable for general viewing and cannot be changed. Industrial machine vision cameras come without lenses, because lenses come not only in a variety of focal lengths for different magnifications, but also in a variety of resolutions. Choosing a lens requires you to know the size of the sensor, your working distance, your field of view, and the pixel size. (See related educational blogs on lenses at end of this post.)
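The four inputs listed above can be combined into a first estimate of the focal length. The Python sketch below uses the common approximation focal length ≈ sensor width × working distance / field of view, which is reasonable when the working distance is much larger than the focal length. The sensor and setup values are hypothetical, and a real selection should still be checked against the lens manufacturer's data.

```python
def sensor_width_mm(pixel_size_um, pixels_across):
    """Sensor width from pixel size (micrometers) and horizontal pixel count."""
    return pixel_size_um * pixels_across / 1000.0


def focal_length_mm(sensor_w_mm, working_distance_mm, fov_mm):
    """Approximate focal length; assumes working distance >> focal length."""
    return sensor_w_mm * working_distance_mm / fov_mm


# Assumed setup: 3.45 um pixels, 2448 pixels across,
# 300 mm working distance, 100 mm horizontal field of view.
sensor_w = sensor_width_mm(3.45, 2448)
f = focal_length_mm(sensor_w, 300, 100)
mm_per_px = 100 / 2448  # object-space size of one pixel

print(f"Sensor width: {sensor_w:.2f} mm")
print(f"Estimated focal length: {f:.1f} mm")
print(f"Each pixel covers about {mm_per_px * 1000:.0f} um on the object")
```

For these assumed values the estimate comes out near 25 mm, so a standard 25 mm lens would be a sensible starting point, with the working distance adjusted slightly to hit the exact field of view.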

What are your options for a low cost camera solution?

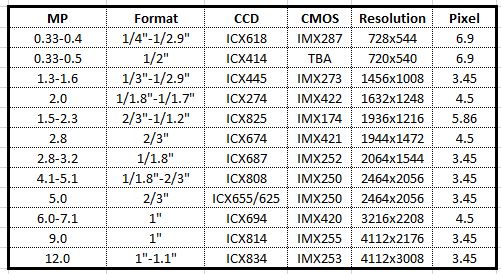

If you need an industrial machine vision camera solution with a solid SDK and a long life cycle at a low price, there are several solutions to consider. Rolling shutter imagers and USB2 interfaces are generally the lowest priced, so they are a good place to start. Read our previous blog HERE, which outlines some specific low-cost models. There is also a great new platform coming that provides 5 Megapixel resolution with a rolling shutter imager, but with great performance, for $280! Contact us for more details.

1st Vision’s sales engineers have over 100 years of combined experience to assist in your camera selection. With a large portfolio of lenses, cables, NIC cards and industrial computers, we can provide a full vision solution!

Ph: 978-474-0044 / info@1stvision.com / www.1stvision.com

Related Posts