Recently we announced Allied Vision’s 5GigE SWIR Goldeye Pro cameras. Pretty cool. Pun intended. Click that link above for the 10,000-foot view of the camera series, its features, and the overall value proposition.

SWIR cameras come in both cooled and uncooled models

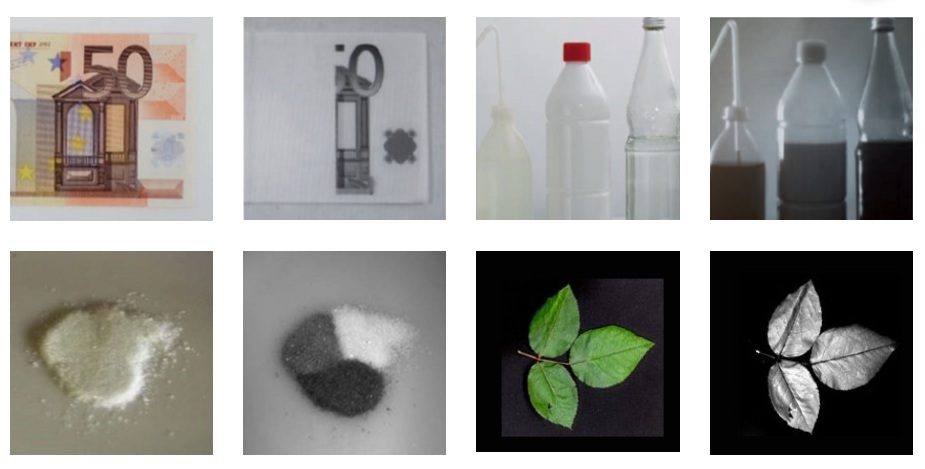

So you are already doing Short-Wave-InfraRed imaging, or think you might want to, for all the reasons and applications discussed in our SWIR cameras and applications knowledge-base article.

Cooled vs uncooled performance – what are the differences?

As with many engineering design choices and product selection options, for a given application one needs components that are good enough – perhaps with a little margin – to get the job done. But not overdesigned – as that would add cost, weight, and volume delivering no measurable benefit.

Thermoelectric cooling (TEC)

The InGaAs (indium gallium arsenide) sensors used for SWIR imaging deliver the best images when temperature-stabilized. Thermoelectric cooling (TEC) provides that stabilization, which helps reduce dark noise and thermally generated dark current.

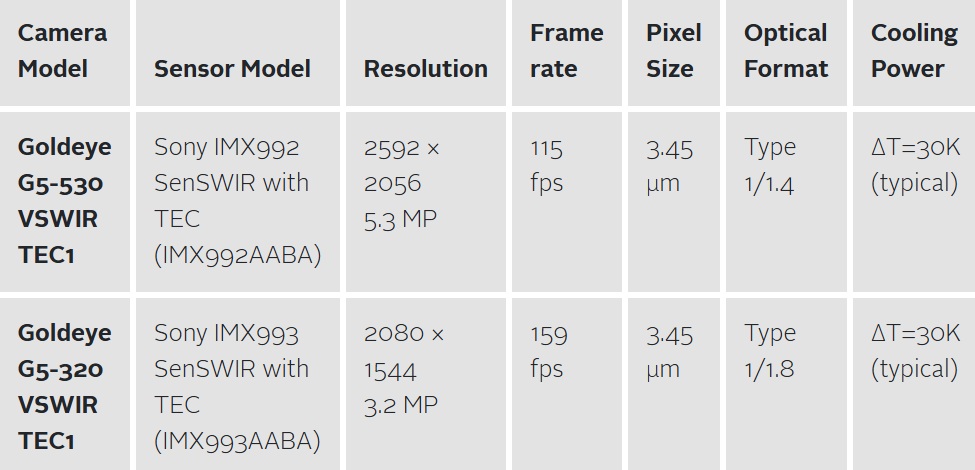

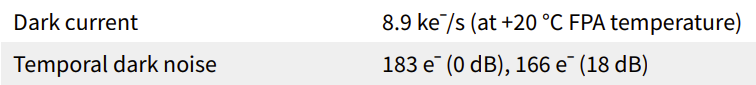

With TEC, see key performance metrics

For the Goldeye Pro G5-320, we snapshot key metrics from the datasheet:

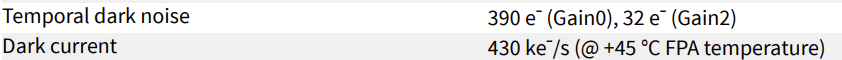

Compare to uncooled Goldeye G-033 metrics:

Sensor performance comparison summary

For background on sensor performance testing, review our tutorial on EMVA 1288 attributes and standards. There we go into the meaning of key terms like dark noise, dark current, saturation capacity, and dynamic range.

While the G-033 and G5-320 sensors above differ in size and release date, they are both InGaAs sensors, so they share the same essential characteristics. And we can be sure that Allied Vision engineered each camera for the best possible performance relative to housing design, electronics positioning, and so forth.

| Camera | G5-320 | G-033 |
| --- | --- | --- |
| Temporal dark noise | 183 e⁻ | 390 e⁻ |
| Dark current | 8.9 ke⁻/s | 430 ke⁻/s |
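To put the two dark-current figures in perspective, here is a rough back-of-envelope sketch in Python. It uses only the four datasheet values from the table; everything else is an assumption for illustration. In particular, the 10 ms exposure time is hypothetical, and the noise model (datasheet dark noise combined in quadrature with the shot noise of the accumulated dark signal) is a common simplification, not Allied Vision's published method. The helper names `dark_signal_e` and `total_dark_noise_e` are ours.

```python
import math

# Datasheet values quoted in the table (electrons, electrons per second).
CAMERAS = {
    "G5-320 (cooled)": {"dark_noise_e": 183.0, "dark_current_e_per_s": 8.9e3},
    "G-033 (uncooled)": {"dark_noise_e": 390.0, "dark_current_e_per_s": 430e3},
}

# Hypothetical exposure time, chosen only for illustration.
EXPOSURE_S = 0.010


def dark_signal_e(dark_current_e_per_s: float, exposure_s: float) -> float:
    """Mean number of dark electrons accumulated during one exposure."""
    return dark_current_e_per_s * exposure_s


def total_dark_noise_e(dark_noise_e: float,
                       dark_current_e_per_s: float,
                       exposure_s: float) -> float:
    """Assumed model: datasheet dark noise plus dark-current shot noise,
    added in quadrature (shot-noise variance equals the mean dark signal)."""
    shot_variance = dark_signal_e(dark_current_e_per_s, exposure_s)
    return math.sqrt(dark_noise_e ** 2 + shot_variance)


if __name__ == "__main__":
    for name, c in CAMERAS.items():
        signal = dark_signal_e(c["dark_current_e_per_s"], EXPOSURE_S)
        noise = total_dark_noise_e(
            c["dark_noise_e"], c["dark_current_e_per_s"], EXPOSURE_S
        )
        print(f"{name}: dark signal ~{signal:.0f} e-, dark noise ~{noise:.1f} e-")
```

Under these assumptions, the uncooled sensor accumulates roughly 48 times more dark electrons per exposure than the cooled one (430 / 8.9), and the gap widens linearly as exposure time grows. Whether that matters depends on how much of your sensor's capacity and noise budget the application can afford to spend on dark signal.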

What performance does your SWIR application require?

We love to learn about client applications, and to guide you to best-fit selections for sensors, cameras, lenses, lighting, and software. Whether you are new to SWIR or experienced, let us help!

1st Vision’s sales engineers have over 100 years of combined experience to assist in your camera and components selection. With a large portfolio of cameras, lenses, cables, network interface cards (NICs), and industrial computers, we can provide a full vision solution!

About you: We want to hear from you! We’ve built our brand on our know-how and like to educate the marketplace on imaging technology topics… What would you like to hear about?… Drop a line to info@1stvision.com with what topics you’d like to know more about.