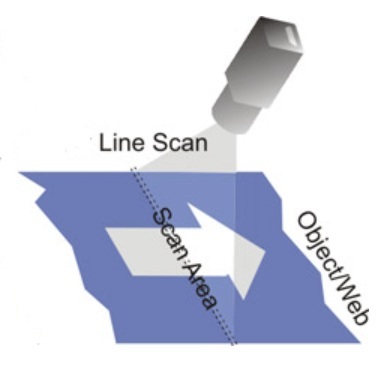

Unless you calculate and set the line rate correctly, you risk blurred images and sub-optimal performance. You may also end up buying a line scan camera that isn't up to the task, or one that's overkill and costs more than necessary.

Optional line scan review or introduction

Skip to the next section if you know line scan concepts already. Otherwise…

Perhaps you already know area scan imaging, where a global shutter exposes all pixels on a 2D sensor concurrently to produce a 2D image, and you'd like to understand line scan imaging by comparing it to area scan. See our blog “What is the difference between an Area Scan and a Line Scan Camera?”

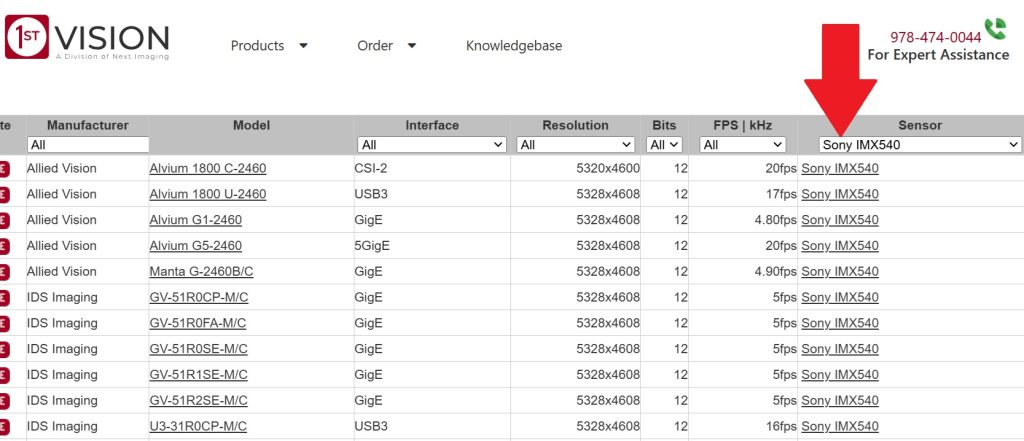

Maybe you'd prefer an overview of a specific high-end product, with application suggestions, such as the Teledyne DALSA 16k TDI line scan camera with a 1 MHz line rate. Or a view of dozens of line scan models, varying not only by manufacturer but also by sensor size and resolution, interface, and whether monochrome or color.

Either you recall how to determine resolution requirements in terms of pixel size relative to defect size, or you've followed the link in this sentence to a tutorial. So we'll keep this blog as simple as possible and deal with line rate calculation only.

Calculate the line rate

Getting the line rate right is the application of the Goldilocks principle to line scanning.

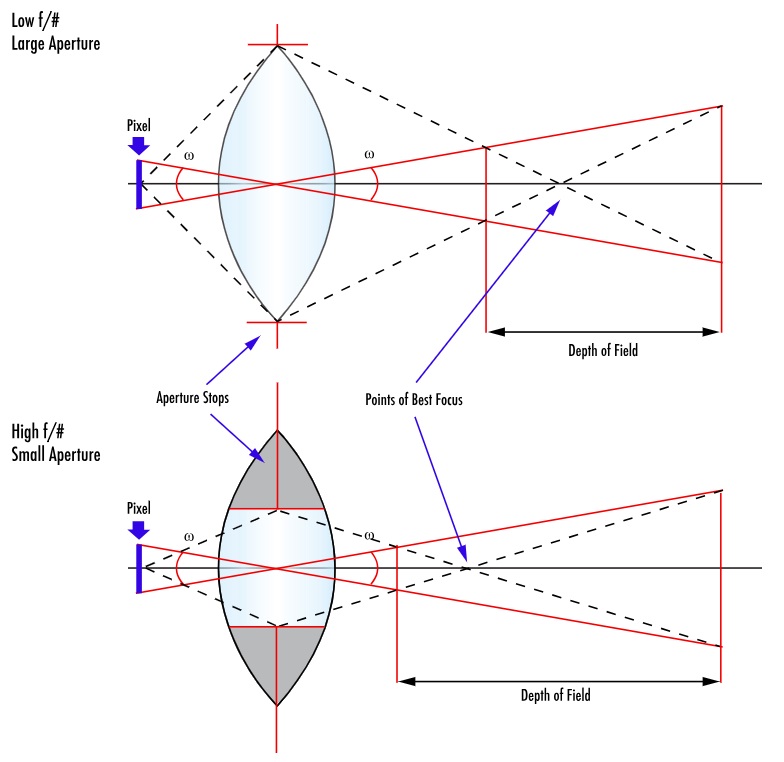

| Line rate too slow… | Line rate too fast… |
| --- | --- |
| Blurred image if exposure is too long, and/or missed segments due to skipped “slices” | Oversampling can create confusion by identifying the same feature as two distinct features |
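One way to see which column of the table you're in is to compare how far the target moves between lines with the object-space width of one pixel. The sketch below assumes square object-space pixels are the goal; the function name and the 5% tolerance are illustrative choices of ours, not values from any camera manufacturer.

```python
def diagnose_line_rate(line_rate_hz: float, speed_mm_s: float,
                       pixel_mm: float, tolerance: float = 0.05) -> str:
    """Classify a line rate as too slow, too fast, or matched.

    Along-scan pixel length = distance travelled per line = speed / line rate.
    Comparing it with the cross-scan pixel size (pixel_mm) shows whether each
    line covers more, less, or the same distance as one pixel is wide.
    The tolerance is an illustrative assumption, not a standard value.
    """
    along_scan_mm = speed_mm_s / line_rate_hz
    ratio = along_scan_mm / pixel_mm
    if ratio > 1 + tolerance:
        return "too slow: stretched pixels or skipped slices"
    if ratio < 1 - tolerance:
        return "too fast: oversampling"
    return "matched"


# Example: 0.275 mm pixels, target moving at 4000 mm/s
for rate in (7000.0, 14545.0, 30000.0):
    print(rate, diagnose_line_rate(rate, 4000.0, 0.275))
```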

A rotary encoder is typically used to synchronize the motion of the conveyor or web with the line scan camera (and lighting, if pulsed). Naturally the system cannot be operated faster than the maximum line speed, but it may sometimes operate more slowly. This may happen during ramp-up or slow-down phases, when imaging may still be needed, or by operator choice to conserve energy or avoid stressing mechanical systems.
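Because the encoder ties line triggering to actual travel rather than to a clock, the line rate scales with conveyor speed during ramp-up, slow-down, or reduced-speed operation. A minimal sketch, assuming a hypothetical encoder that delivers 40 pulses per mm of travel and the 0.275 mm object-space pixel used as the example later in this blog:

```python
ENCODER_PULSES_PER_MM = 40.0  # hypothetical encoder resolution (assumption)
PIXEL_MM = 0.275              # object-space size of one pixel, in mm


def pulses_per_line(pulses_per_mm: float, pixel_mm: float) -> float:
    """Encoder pulses to count between line triggers so that one line is
    acquired per pixel-length of travel, independent of speed."""
    return pulses_per_mm * pixel_mm


def resulting_line_rate_hz(speed_mm_s: float, pixel_mm: float) -> float:
    """Line rate that encoder triggering produces at a given speed."""
    return speed_mm_s / pixel_mm


divider = pulses_per_line(ENCODER_PULSES_PER_MM, PIXEL_MM)  # about 11 pulses
# Hypothetical ramp-up profile in mm/s, ending at the maximum line speed
for speed in (500.0, 1000.0, 2000.0, 4000.0):
    rate = resulting_line_rate_hz(speed, PIXEL_MM)
    print(f"{speed:6.0f} mm/s -> every {divider:.0f} pulses = {rate:8.1f} Hz")
```

The pulse count per line stays fixed while the line rate follows the speed, so only the maximum line speed determines the highest line rate the camera must support.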

Naming the variables … with example values

Resolution A = object-space size of one pixel = FOV / number of pixels in the sensor array; e.g. for a 550 mm FOV and a 2k (2000-pixel) sensor, A = 550 / 2000 = 0.275 mm per pixel

Transport speed T = rate of motion of the target, in mm per sec; e.g. T = 4000 mm/sec (4 m/sec)

Sampling frequency F = T / A; for the example values above, F = 4000 / 0.275 ≈ 14,545 lines per sec ≈ 14.5 kHz. Spelled out: Frequency = Transport_speed / Pixel_spatial_resolution (what 1 pixel equals in target space)

For the example figures used above, a line scan camera with 2k resolution and a maximum line rate of at least about 14.5 kHz will be sufficient.
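The definitions above fit in a few lines of Python, which can serve as a quick cross-check for the spreadsheet. The function names are our own; the inputs are the example values from this section.

```python
def pixel_size_mm(fov_mm: float, pixels: int) -> float:
    """Resolution A: object-space size of one pixel (mm per pixel)."""
    return fov_mm / pixels


def line_rate_hz(transport_mm_s: float, fov_mm: float, pixels: int) -> float:
    """Sampling frequency F = T / A, in lines per second (Hz)."""
    return transport_mm_s / pixel_size_mm(fov_mm, pixels)


A = pixel_size_mm(550.0, 2000)          # 550 mm FOV on a 2000-pixel sensor
F = line_rate_hz(4000.0, 550.0, 2000)   # target moving at 4000 mm/s
print(f"A = {A:.3f} mm/pixel, F = {F / 1000:.1f} kHz")
# prints: A = 0.275 mm/pixel, F = 14.5 kHz
```

When shortlisting cameras, look for a maximum line rate at or above F.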

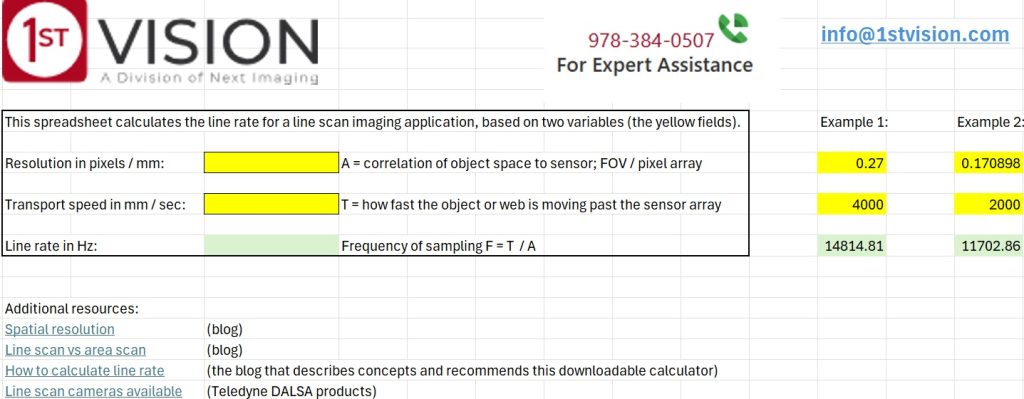

Download spreadsheet with labeled fields and examples:

Just click here, or on the image below, to download the spreadsheet calculator. It is the companion piece for this blog and includes clearly labeled fields and examples:

Not included here… but happy to show you how

We’ve kept this blog intentionally lean, to avoid information overload. Additional values may also be calculated, of course, such as:

Data rate in MB/sec: Useful to confirm that the camera interface can sustain the data rate.

Frame time: The amount of time available to process each scanned image. It's important to be sure the PC and image processing software are up to the task, based on empirical experience or by conferring with the software provider.
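Both values follow directly from the line rate. A minimal sketch, assuming 8-bit monochrome output (1 byte per pixel; 8-bit RGB output would be 3) and a hypothetical frame length of 2000 lines, reusing the example 2000-pixel, roughly 14.5 kHz configuration from this blog:

```python
def data_rate_mb_s(pixels_per_line: int, line_rate_hz: float,
                   bytes_per_pixel: float = 1.0) -> float:
    """Sustained camera output in MB/s (1 MB = 1,000,000 bytes)."""
    return pixels_per_line * line_rate_hz * bytes_per_pixel / 1e6


def frame_time_s(lines_per_frame: int, line_rate_hz: float) -> float:
    """Time to acquire one frame, i.e. the average time budget available
    to process it if processing is to keep up with acquisition."""
    return lines_per_frame / line_rate_hz


LINE_RATE_HZ = 4000.0 / 0.275  # example line rate from this blog (~14.5 kHz)

rate = data_rate_mb_s(2000, LINE_RATE_HZ)            # 8-bit mono assumed
budget_ms = frame_time_s(2000, LINE_RATE_HZ) * 1e3   # hypothetical 2000-line frame
print(f"data rate = {rate:.1f} MB/s, frame time = {budget_ms:.1f} ms")
```

Compare the data rate with the sustained bandwidth your chosen interface actually delivers. As a sanity check, at 0.275 mm per pixel a 2000-line frame covers 550 mm of travel, matching the FOV width.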

1st Vision’s sales engineers have over 100 years of combined experience to assist in your camera and components selection. With a large portfolio of cameras, lenses, cables, NIC cards and industrial computers, we can provide a full vision solution!

About you: We want to hear from you! We've built our brand on our know-how and like to educate the marketplace on imaging technology topics… What would you like to hear about? Drop a line to info@1stvision.com with the topics you'd like to know more about.